Interpretable Machine Learning with Python by Serg Masís (PDF)


Interpretable Machine Learning with Python by Serg Masís focuses on building transparent, explainable models. It tackles the challenge of making complex AI algorithms understandable so that machine learning systems can be trusted and held accountable. The book provides practical techniques and tools for building high-performance, interpretable models with Python libraries such as LIME and SHAP. This matters in real-world applications, where data scientists need to explain individual model decisions and check that systems are fair and reliable.

Overview of the Book by Serg Masis

Serg Masís’s Interpretable Machine Learning with Python is a comprehensive guide to building transparent, explainable models. The book covers the key concepts, challenges, and techniques for making machine learning algorithms easier to understand, and pairs them with hands-on examples and real-world applications so that readers can put interpretable models into practice. It emphasizes trust and accountability in AI systems, which makes it valuable for data scientists and developers alike. The second edition expands its coverage of interpretable deep learning and includes practical tools such as LIME and SHAP. The material is structured for both beginners and advanced practitioners, offering a detailed yet accessible path to reliable, fair machine learning models.

Importance of Interpretable Machine Learning

Interpretable machine learning (IML) is crucial for building trust and accountability in AI systems. By making complex models transparent, IML lets stakeholders understand, and therefore trust, model decisions. This is vital in sensitive domains such as healthcare and finance, where regulations often require automated decisions to be explained. Serg Masís’s book emphasizes that interpretable models not only improve decision-making but also make it possible to identify biases and errors. In real-world applications, IML fosters collaboration between data scientists and domain experts, leading to better outcomes. As AI becomes pervasive, demand for interpretable models grows, making IML a cornerstone of responsible and ethical machine learning practice.

Key Concepts and Challenges

Key concepts in interpretable machine learning include transparency, explainability, and model simplicity. Techniques such as feature importance and model-agnostic methods are essential for understanding complex algorithms. A central challenge is the trade-off between accuracy and interpretability: simpler models are easier to read but may sacrifice predictive performance. Serg Masís’s book shows how to address these challenges with practical tools and methodologies. Ensuring fairness and avoiding bias are also critical and require careful model auditing. Addressing these challenges is fundamental to building reliable, trustworthy AI systems, and practitioners who master these concepts can build models that are both high-performing and interpretable, meeting ethical and practical demands across industries.
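To make the accuracy/interpretability trade-off concrete, here is a minimal sketch that assumes scikit-learn is installed. It compares a depth-2 decision tree, which can be printed in full as a few if-then rules, with a random forest on scikit-learn’s bundled breast cancer dataset. The dataset, tree depth, and forest size are illustrative choices, not taken from the book.

```python
# Sketch of the accuracy/interpretability trade-off (assumes scikit-learn).
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier, export_text

data = load_breast_cancer()
X_train, X_test, y_train, y_test = train_test_split(
    data.data, data.target, test_size=0.25, random_state=0, stratify=data.target
)

# Simple model: a depth-2 tree has at most four leaves, i.e. four rules.
simple = DecisionTreeClassifier(max_depth=2, random_state=0).fit(X_train, y_train)
# Complex model: 200 deep trees, usually more accurate but not readable as a whole.
forest = RandomForestClassifier(n_estimators=200, random_state=0).fit(X_train, y_train)

simple_acc = simple.score(X_test, y_test)
forest_acc = forest.score(X_test, y_test)
print(f"depth-2 tree accuracy:  {simple_acc:.3f}")
print(f"random forest accuracy: {forest_acc:.3f}")

# The entire simple model can be printed as human-readable rules.
rules = export_text(simple, feature_names=list(data.feature_names))
print(rules)
```

The size of the accuracy gap depends on the data and the split; the point is that only the shallow tree can be inspected rule by rule.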

The Structure of the Book

Interpretable Machine Learning with Python is organized into four parts, covering fundamentals, techniques, model-agnostic methods, and real-world applications, which together provide a comprehensive learning path for practitioners.

Part 1: Fundamentals of Machine Learning Interpretability

This part introduces the core concepts of interpretable machine learning, focusing on why transparency in model decisions matters. It explores the basics of machine learning interpretability, including key challenges and the techniques that address them. Readers learn about the trade-offs between model complexity and interpretability, as well as essential metrics for evaluating model explanations. The part also covers foundational methods for understanding feature importance and model behavior, setting the stage for more advanced techniques in later chapters. By emphasizing practical approaches, it equips learners to build and interpret models effectively, supporting trust and reliability in real-world applications.
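One widely used measure of explanation quality is fidelity: how closely an explanation reproduces the model it explains. The sketch below assumes scikit-learn and uses synthetic data; it trains a depth-3 decision tree as a global surrogate for a gradient-boosting model and reports the share of test points on which the two agree. Fidelity is one common metric, not necessarily the set of metrics the book uses.

```python
# Sketch of a global surrogate and its fidelity score (assumes scikit-learn).
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data stands in for a real dataset.
X, y = make_classification(n_samples=2000, n_features=8, n_informative=4, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

black_box = GradientBoostingClassifier(random_state=0).fit(X_train, y_train)

# The surrogate learns the black box's predictions, not the true labels,
# because its job is to explain the model rather than the data.
surrogate = DecisionTreeClassifier(max_depth=3, random_state=0)
surrogate.fit(X_train, black_box.predict(X_train))

# Fidelity: fraction of held-out points where surrogate and black box agree.
fidelity = np.mean(surrogate.predict(X_test) == black_box.predict(X_test))
# Accuracy of the black box itself, for comparison.
accuracy = black_box.score(X_test, y_test)
print(f"black-box accuracy: {accuracy:.3f}  surrogate fidelity: {fidelity:.3f}")
```

A high-fidelity surrogate can be read as a summary of the black box; a low score means the tree’s rules should not be trusted as an explanation, however readable they are.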

Part 2: Techniques for Building Interpretable Models

This part covers practical methods for constructing interpretable models, emphasizing techniques that balance accuracy and transparency. It explores model-agnostic approaches, such as feature importance analysis and partial dependence plots, which can be applied to any machine learning algorithm. Readers learn how to use Python libraries such as LIME and SHAP to explain model decisions. This part also covers intrinsically interpretable models, such as linear models and decision trees, and discusses their advantages in real-world scenarios. By focusing on actionable strategies, it enables developers to build models that are both high-performing and easy to understand, fostering trust and usability in machine learning applications.
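As an example of intrinsic interpretability, a logistic regression can be read directly from its coefficients. This minimal sketch assumes scikit-learn and standardizes the features first, so that exp(coefficient) is the multiplicative change in the odds of class 1 (benign, in this dataset) for a one-standard-deviation increase in a feature, with the other features held fixed. The dataset and settings are illustrative.

```python
# Reading a linear model directly: coefficients and odds ratios (assumes scikit-learn).
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

data = load_breast_cancer()
# Standardize so coefficients are comparable across features.
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
model.fit(data.data, data.target)

coefs = model.named_steps["logisticregression"].coef_[0]
# exp(coef): factor by which the odds of class 1 change per +1 standard deviation.
odds_ratios = np.exp(coefs)

# Show the five features with the largest absolute effect.
top = np.argsort(np.abs(coefs))[::-1][:5]
for i in top:
    print(f"{data.feature_names[i]:<25} coef={coefs[i]:+.2f}  odds x{odds_ratios[i]:.2f}")
```

This reading assumes the features are not strongly correlated; in this dataset several are (radius, perimeter, and area, for instance), which makes individual coefficients less stable than the model’s overall predictions.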

Part 3: Model-Agnostic Interpretability Methods

This part focuses on techniques that can be applied to any machine learning model to make it more interpretable. Model-agnostic methods such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) are explored in detail. These approaches reveal how much each feature contributes to a prediction and how the model behaves locally around a given input, making complex models more transparent. This part also covers partial dependence plots and feature importance analysis, which help visualize how models make predictions. With these tools, developers can understand and explain the decisions of even sophisticated algorithms, supporting trust and regulatory compliance in real-world applications. These methods are particularly useful for bridging the gap between model complexity and human understanding, which makes them indispensable in modern machine learning workflows.
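The idea behind SHAP can be shown with a brute-force computation of exact Shapley values. This is a from-scratch illustration in numpy, not the shap library’s API: features outside a coalition are replaced by a single baseline value (an assumption; SHAP’s explainers usually average over a background dataset), and every coalition is enumerated.

```python
# Brute-force exact Shapley values (illustrative sketch, numpy only).
from itertools import combinations
from math import factorial

import numpy as np


def exact_shapley(f, x, baseline):
    """Exact Shapley values of f at x, relative to a single baseline point.

    Features outside a coalition take their baseline value.
    """
    x = np.asarray(x, dtype=float)
    baseline = np.asarray(baseline, dtype=float)
    m = x.size

    def value(coalition):
        # Model output with coalition features from x, the rest from baseline.
        z = baseline.copy()
        idx = np.array(coalition, dtype=int)
        z[idx] = x[idx]
        return f(z)

    phi = np.zeros(m)
    for i in range(m):
        others = [j for j in range(m) if j != i]
        for size in range(m):
            # Shapley weight |S|! (m - |S| - 1)! / m!
            weight = factorial(size) * factorial(m - size - 1) / factorial(m)
            for s in combinations(others, size):
                phi[i] += weight * (value(s + (i,)) - value(s))
    return phi


# Usage: for a linear model, the Shapley values are w_i * (x_i - baseline_i).
w = np.array([2.0, -1.0, 0.5])


def linear(z):
    return float(w @ z + 1.0)


x = np.array([1.0, 2.0, 3.0])
baseline = np.zeros(3)
phi = exact_shapley(linear, x, baseline)
print(phi)  # ≈ [2.0, -2.0, 1.5]
# Efficiency property: attributions sum to f(x) - f(baseline).
print(phi.sum(), linear(x) - linear(baseline))
```

The loop evaluates the model on every coalition, so the cost grows exponentially with the number of features. That is why SHAP relies on model-specific shortcuts (such as TreeExplainer for tree ensembles) or sampling-based approximations (such as KernelExplainer) in practice.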

Part 4: Case Studies and Real-World Applications

This part presents practical examples of interpretable machine learning in various industries. Real-world case studies demonstrate how to apply techniques such as SHAP and LIME to understand model decisions in healthcare, finance, and other domains. The book provides hands-on examples, such as predicting patient outcomes and assessing credit risk, to illustrate why transparency matters in high-stakes environments. Readers learn how to deploy interpretable models that balance performance with explainability while addressing challenges such as regulatory compliance and user trust. These case studies highlight the practical benefits of interpretable machine learning and its value in solving real-world problems with ethical, accountable AI systems.

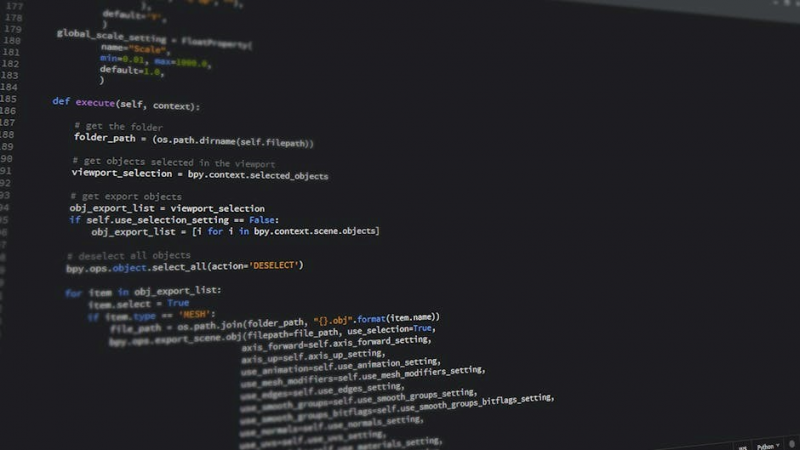

Tools and Libraries for Interpretable Machine Learning in Python

Python offers powerful libraries such as LIME, SHAP, and Anchors for model interpretability. These tools help create transparent, explainable models and enhance trust in AI systems.

Popular Libraries: LIME, SHAP, and Anchors

Python’s ecosystem includes LIME, SHAP, and Anchors, three widely used libraries for interpretable machine learning. LIME (Local Interpretable Model-agnostic Explanations) explains an individual prediction by fitting a simple surrogate model, typically a sparse linear model, to the black-box model’s behavior in the neighborhood of that input. SHAP (SHapley Additive exPlanations) attributes each prediction to its input features using Shapley values from cooperative game theory, and these per-prediction attributions can be aggregated into global feature importance. Anchors produces if-then rules under which the model’s prediction stays the same with high probability, giving clear and actionable explanations. Because all three explain an already-trained model rather than replacing it, they add interpretability without changing the model’s predictions or accuracy. They are particularly useful for explaining black-box models, helping data scientists validate and trust their systems, and they let developers build models that are both accurate and interpretable, in line with ethical AI practices.
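The core of LIME can be sketched in a few lines of numpy: perturb the instance, weight the perturbed samples by proximity, and fit a weighted linear model whose slopes serve as the local explanation. This is a simplified from-scratch version, not the lime package’s API; the Gaussian perturbation scale, kernel width, and sample count are illustrative assumptions.

```python
# LIME-style local surrogate, written from scratch with numpy (simplified sketch).
import numpy as np


def local_surrogate(predict, x, n_samples=5000, scale=0.5, kernel_width=0.75, seed=0):
    """Fit a proximity-weighted linear model to predict() around x.

    Simplifications versus the lime package: Gaussian perturbations in the
    raw feature space, no discretization, and no feature selection.
    Returns (local intercept, per-feature local slopes).
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    # 1. Perturb the instance to probe the model in its neighborhood.
    Z = x + rng.normal(scale=scale, size=(n_samples, x.size))
    y = predict(Z)
    # 2. Weight each perturbed sample by its proximity to x (exponential kernel).
    dist = np.linalg.norm(Z - x, axis=1)
    weights = np.exp(-(dist ** 2) / kernel_width ** 2)
    # 3. Weighted least squares: scale rows by sqrt(weight), then solve.
    A = np.column_stack([np.ones(n_samples), Z - x])
    sw = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return coef[0], coef[1:]


# Usage: a nonlinear "black box" whose true local slopes at x = (1, 0) are (2, 1).
def black_box(Z):
    return Z[:, 0] ** 2 + Z[:, 1]


intercept, slopes = local_surrogate(black_box, [1.0, 0.0])
print(slopes)  # close to the true local slopes, up to sampling noise
```

Because the perturbations are random, the estimated slopes vary slightly with the seed; fixing `seed` makes an explanation reproducible.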

Python Tools for Model Interpretability

Python offers a variety of tools that enhance model interpretability. Scikit-learn’s sklearn.inspection module provides permutation importance and partial dependence plots for analyzing model behavior. The TensorFlow ecosystem offers TensorBoard for visualizing training and model performance, and the What-If Tool for probing model behavior, including fairness metrics. For PyTorch, Captum provides attribution methods such as Integrated Gradients that reveal feature contributions. These tools help developers build interpretable models and foster trust and accountability in AI systems. With them, data scientists can decode complex models and support ethical, reliable outcomes in real-world applications, in line with the principles of explainable AI.

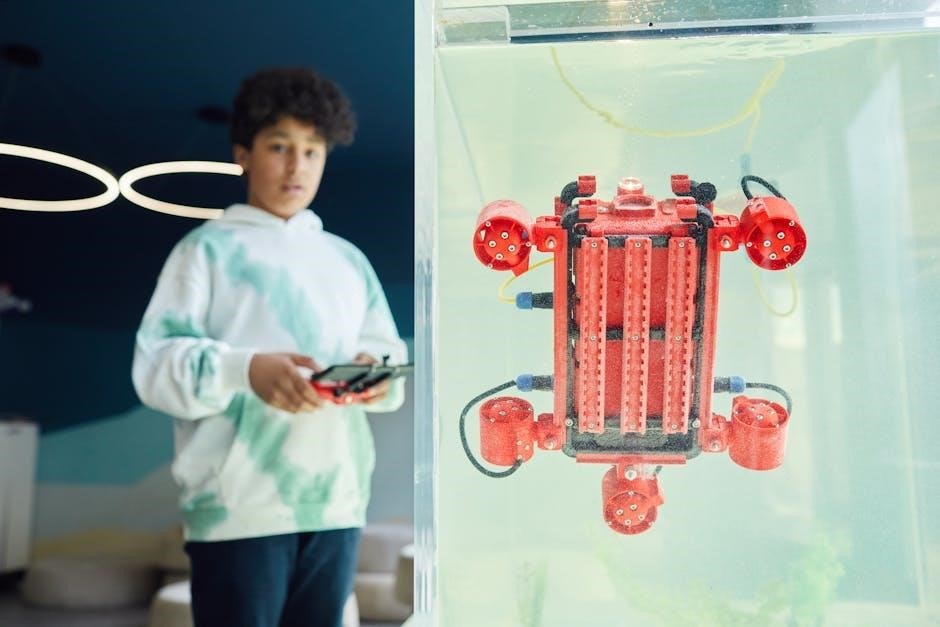

Real-World Applications of Interpretable Machine Learning

Interpretable ML is crucial in healthcare for trustworthy diagnosis and personalized treatment plans. In finance, it ensures transparent credit risk assessments and fair fraud detection systems.

Healthcare and Medicine

Interpretable machine learning is vital in healthcare for building trustworthy models that support diagnosis, treatment planning, and the prediction of patient outcomes. Because these models are transparent, clinicians can understand their predictions, such as a patient’s disease risk or the therapy most likely to help. Tools such as LIME and SHAP explain complex algorithms in detail, fostering trust in AI-assisted medical decisions. This approach is crucial for ethical compliance and accountability in sensitive healthcare applications.

Finance and Banking

Interpretable machine learning is essential in finance for keeping critical decisions transparent, including credit risk assessment, fraud detection, and portfolio management. By explaining model predictions clearly, techniques such as SHAP and LIME help banks and financial institutions comply with regulations and build trust with stakeholders. They let professionals see how an algorithm reached a decision, for example why it predicted that a loan would default or flagged a transaction as fraudulent. This transparency is crucial for accountability and fairness in high-stakes financial applications. Interpretable models also help organizations meet regulatory requirements, such as the GDPR’s rules on automated decision-making and US fair-lending rules that require lenders to tell applicants why credit was denied, by providing audit trails and justifiable decisions.
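The “justifiable decisions” point can be illustrated with reason codes: the features that pushed one applicant’s predicted risk above that of an average applicant. The sketch below assumes scikit-learn and uses synthetic data with hypothetical feature names. For a linear model, `coef * (x - mean)` is that feature’s contribution to the log-odds relative to the average applicant, which coincides with SHAP values for linear models when features are treated as independent.

```python
# Hypothetical reason codes for a credit decision from a linear model (synthetic data).
import numpy as np
from sklearn.linear_model import LogisticRegression

rng = np.random.default_rng(42)
# Hypothetical feature names, for illustration only.
feature_names = ["debt_to_income", "late_payments", "utilization", "years_of_history"]
n = 2000
X = np.column_stack([
    rng.uniform(0, 0.6, n),  # debt-to-income ratio
    rng.poisson(1.0, n),     # number of late payments
    rng.uniform(0, 1, n),    # credit utilization
    rng.uniform(0, 30, n),   # years of credit history
])
# Synthetic ground truth: risk rises with the first three features, falls with history.
logit = -3 + 4 * X[:, 0] + 0.8 * X[:, 1] + 1.5 * X[:, 2] - 0.05 * X[:, 3]
y = rng.random(n) < 1 / (1 + np.exp(-logit))  # True = default

model = LogisticRegression(max_iter=1000).fit(X, y)


def reason_codes(model, X_ref, x, names, k=2):
    """Top-k features raising this applicant's log-odds of default above average."""
    contrib = model.coef_[0] * (x - X_ref.mean(axis=0))
    order = np.argsort(contrib)[::-1]
    return [(names[i], round(float(contrib[i]), 3)) for i in order[:k] if contrib[i] > 0]


applicant = np.array([0.55, 4, 0.9, 2.0])
codes = reason_codes(model, X, applicant, feature_names)
print(codes)
```

Each reason comes with a numeric contribution, which gives the audit trail the text describes; for nonlinear models, SHAP values can play the same role.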

Challenges and Limitations

Complex models such as deep learning networks pose significant interpretation challenges, and ensuring fairness and transparency remains difficult, requiring a careful balance between accuracy and explainability in AI systems.

Complexity of Modern Machine Learning Models

The growing complexity of machine learning models, particularly deep learning architectures, poses significant challenges for interpretability. Modern models such as neural networks often act as “black boxes,” making their decision-making processes difficult to understand. While these models achieve high accuracy, their intricate structures hinder transparency and complicate efforts to explain predictions to stakeholders. Techniques such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) are used to uncover feature contributions, but even these methods have limits on highly nonlinear, many-layered models: exact Shapley values become exponentially expensive as the number of features grows, and sampling-based approximations can change from run to run. Balancing model performance with interpretability therefore remains a critical challenge, requiring careful selection of techniques to achieve both accuracy and transparency in real-world applications.

Bias and Fairness in Interpretable Models

Mitigating bias and ensuring fairness in machine learning models are crucial for ethical AI. Algorithms often unintentionally reflect biases present in their training data, leading to discriminatory outcomes. Techniques such as SHAP and LIME help reveal when sensitive attributes, or proxies for them, drive predictions, enabling developers to address such issues. Fairness metrics such as equal opportunity, which requires equal true positive rates across groups, are used to evaluate model performance across different groups. However, balancing accuracy with fairness remains challenging, because reducing bias can come at some cost to overall performance. Interpretable models play a key role in detecting and mitigating these biases, fostering trust and accountability in AI systems. Addressing these issues is essential for developing responsible and inclusive machine learning solutions.
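Equal opportunity is simple to check once predictions and group membership are available: compare true positive rates across groups. This minimal numpy sketch assumes binary labels, binary predictions, and a binary group indicator; the function names and toy arrays are hypothetical.

```python
# Equal opportunity difference: gap in true positive rates between two groups.
import numpy as np


def true_positive_rate(y_true, y_pred):
    """Share of actual positives that the model predicts as positive."""
    positives = y_true == 1
    return np.mean(y_pred[positives] == 1)


def equal_opportunity_difference(y_true, y_pred, group):
    """TPR(group == 1) - TPR(group == 0); 0 means equal opportunity holds."""
    y_true, y_pred, group = map(np.asarray, (y_true, y_pred, group))
    g1 = group == 1
    return (true_positive_rate(y_true[g1], y_pred[g1])
            - true_positive_rate(y_true[~g1], y_pred[~g1]))


# Toy example: qualified members of group 1 are approved half as often.
y_true = np.array([1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0])
y_pred = np.array([1, 1, 1, 1, 0, 1, 1, 1, 0, 0, 0, 0])
group = np.array([0] * 6 + [1] * 6)
diff = equal_opportunity_difference(y_true, y_pred, group)
print(diff)  # -0.5: TPR is 0.5 for group 1 versus 1.0 for group 0
```

The metric only looks at actual positives, so it asks whether qualified individuals are treated alike across groups; it says nothing about false positives, which other metrics such as equalized odds also cover.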

The Future of Interpretable Machine Learning

The future of interpretable machine learning lies in advancing techniques that balance model performance with transparency. Emerging trends include enhanced model-agnostic methods and improved feature attribution tools, enabling deeper insights into complex algorithms while maintaining accuracy. Python’s robust ecosystem, with libraries like SHAP and LIME, will continue to drive innovation, making interpretable models more accessible and scalable across industries. As AI adoption grows, the demand for trustworthy and explainable systems will propel further advancements, ensuring machine learning remains both powerful and accountable.

Emerging Trends and Techniques

Emerging trends in interpretable machine learning include advanced model-agnostic interpretability methods and integrations with deep learning frameworks. Techniques like SHAP and LIME continue to evolve, offering improved feature attribution and model transparency. Innovations in neural network interpretability, such as attention mechanisms and explainable deep learning, are gaining traction. Additionally, there is a growing focus on developing hybrid models that balance complexity with interpretability. These advancements are supported by Python libraries, enabling researchers to implement cutting-edge, interpretable solutions efficiently. As machine learning becomes more pervasive, the development of new techniques ensures that models remain both powerful and understandable, fostering trust in AI systems across industries.
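Integrated Gradients is one gradient-based attribution method for neural networks; Captum implements it for PyTorch as captum.attr.IntegratedGradients. To stay dependency-free, this sketch applies the method to a tiny two-layer network written in numpy with a hand-derived gradient. The network weights are random placeholders, and the all-zeros baseline and 200 integration steps are illustrative assumptions.

```python
# Integrated Gradients for a tiny numpy network (illustrative sketch).
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical weights for a 3-input, 4-hidden-unit network with a sigmoid output.
W = rng.normal(size=(4, 3))
b = rng.normal(size=4)
v = rng.normal(size=4)
c = 0.1


def forward(x):
    h = np.tanh(W @ x + b)
    return 1 / (1 + np.exp(-(v @ h + c)))


def gradient(x):
    # Chain rule: d sigmoid(o)/dx = s(1 - s) * W^T (v * (1 - h^2)).
    h = np.tanh(W @ x + b)
    s = 1 / (1 + np.exp(-(v @ h + c)))
    return s * (1 - s) * (W.T @ (v * (1 - h ** 2)))


def integrated_gradients(x, baseline, steps=200):
    """(x - baseline) times the average gradient along the straight-line path."""
    alphas = (np.arange(steps) + 0.5) / steps  # midpoint Riemann sum
    grads = np.array([gradient(baseline + a * (x - baseline)) for a in alphas])
    return (x - baseline) * grads.mean(axis=0)


x = np.array([1.0, -0.5, 2.0])
baseline = np.zeros(3)
ig = integrated_gradients(x, baseline)
print(ig)
# Completeness: attributions sum (approximately) to f(x) - f(baseline).
print(ig.sum(), forward(x) - forward(baseline))
```

The completeness check is a useful sanity test: if the attributions do not add up to the change in output, the number of integration steps is usually too small.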

The Role of Python in Advancing Interpretability

Python plays a pivotal role in advancing interpretable machine learning through its extensive libraries and frameworks. Libraries such as LIME, SHAP, and Anchors provide robust tools for model interpretability, enabling developers to understand and explain complex models. Python’s simplicity and flexibility make it an ideal choice for implementing interpretable techniques, as Serg Masís’s book highlights. Its broader ecosystem, including pandas for data manipulation and Matplotlib for visualization, supports the entire workflow of building and explaining models. Python’s integration with deep learning frameworks such as TensorFlow and PyTorch also makes it possible to apply interpretability techniques to advanced AI models. Its community-driven development ensures continuous innovation, making Python a cornerstone in the pursuit of transparent and trustworthy machine learning systems.